Route data to AWS S3

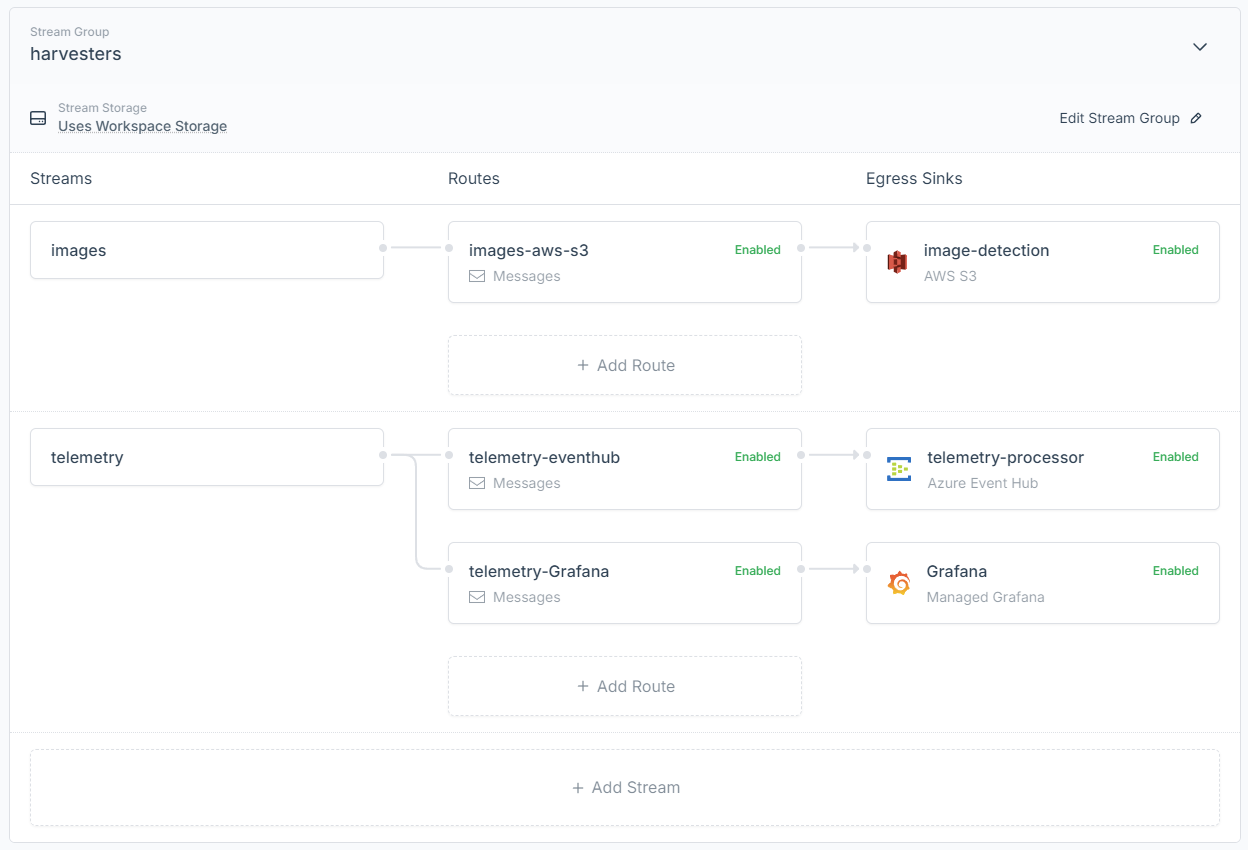

Spotflow customers can now seamlessly route data streams from devices into AWS S3 buckets.

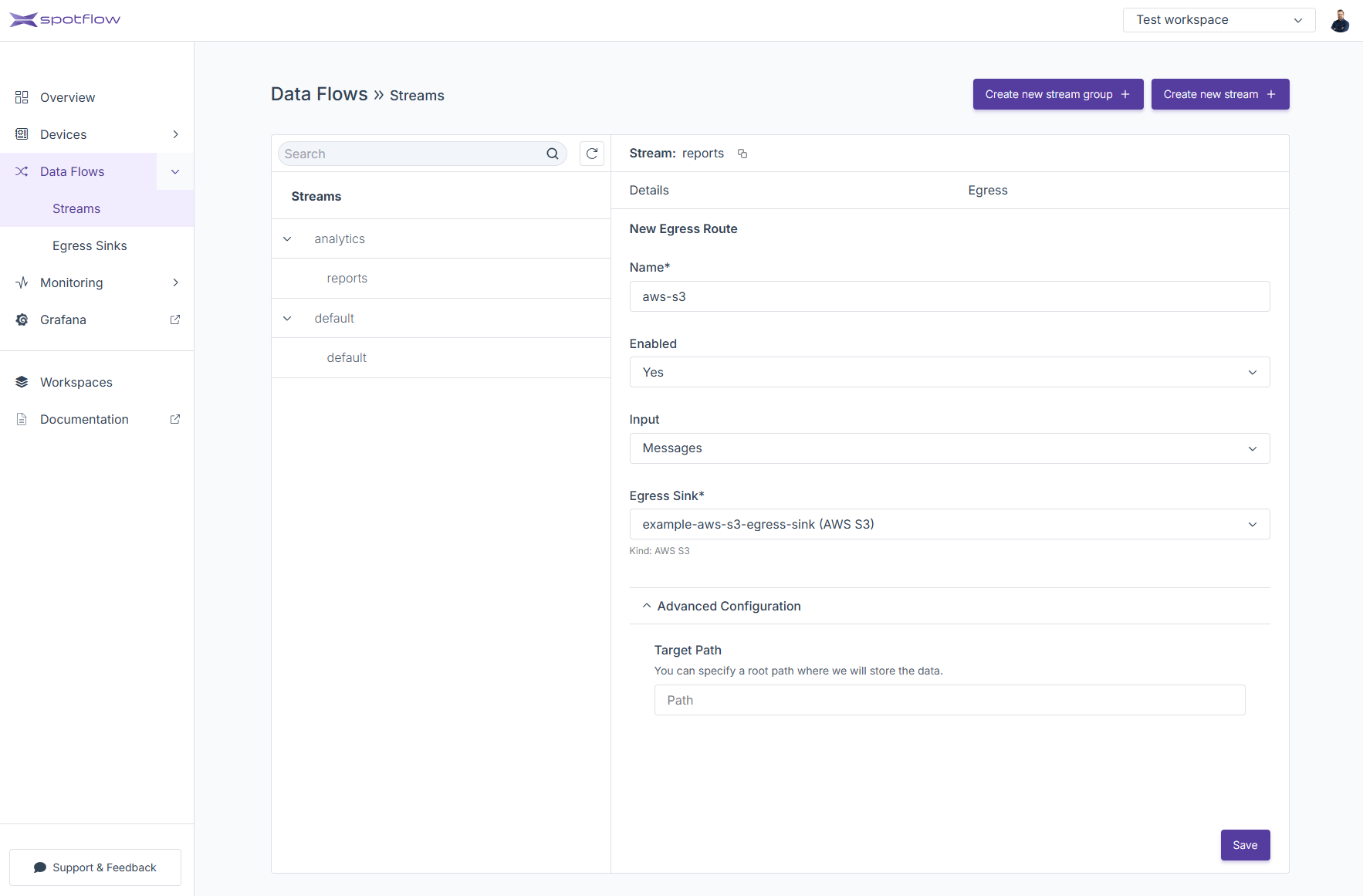

The feature is available as a new Egress Sink kind, and both new and existing streams can be routed to it.

See the Amazon S3 Egress Sink page for detailed documentation.

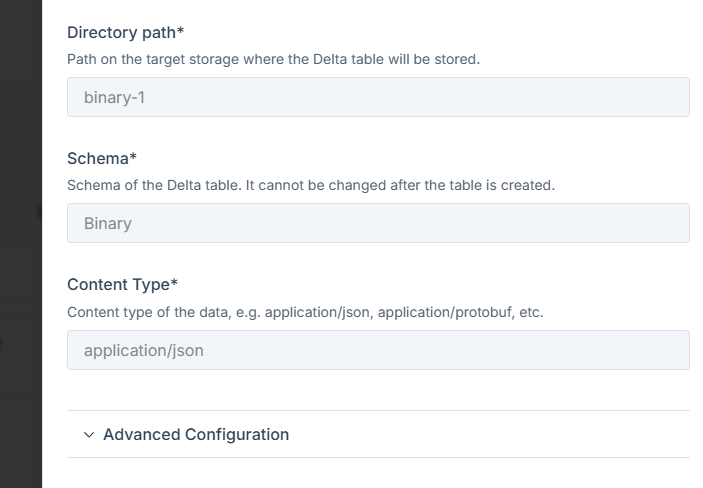

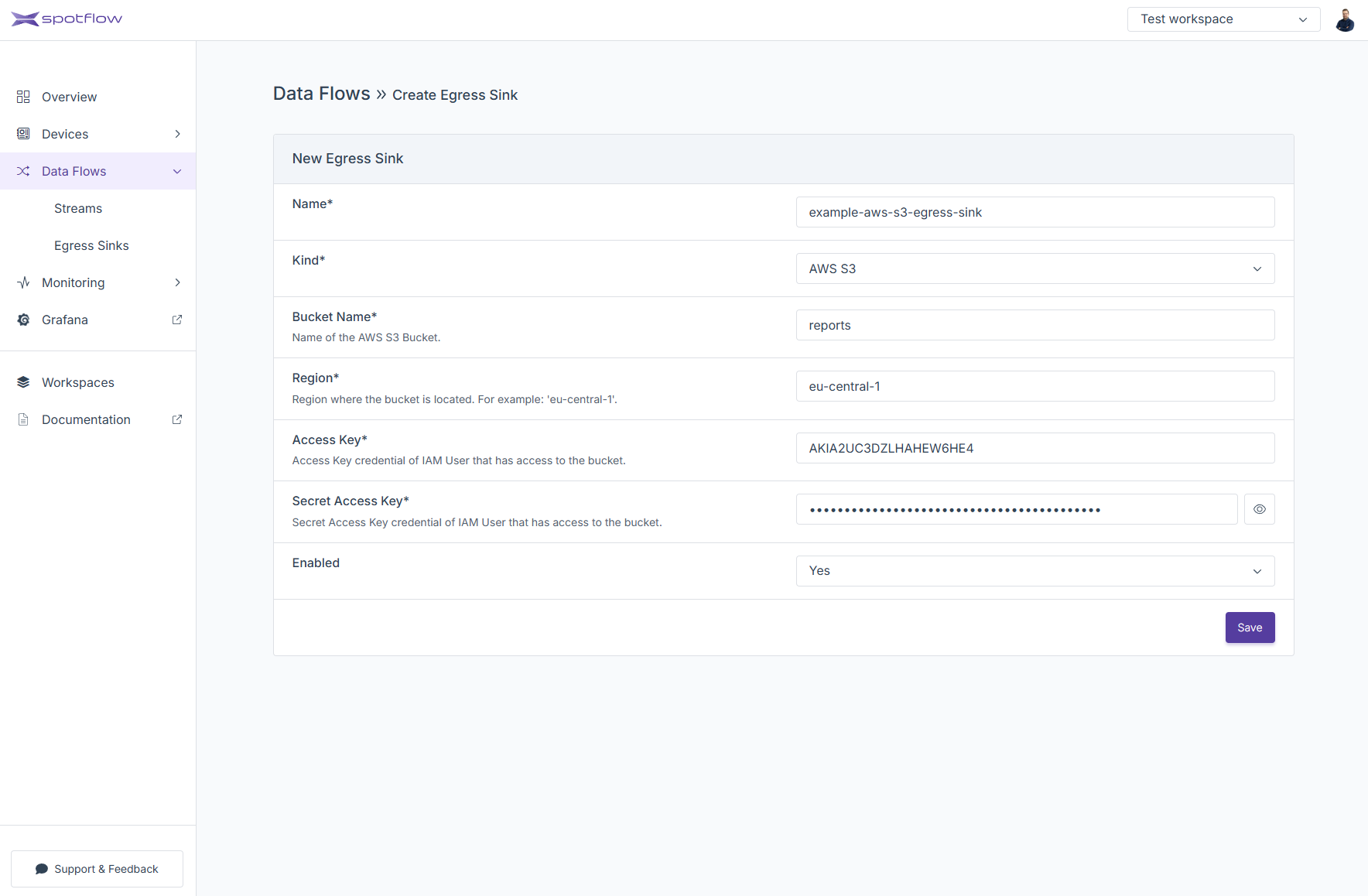

The target S3 bucket is specified by its bucket name, its region, and an IAM user access key.

Optionally, you can add a static prefix to the target path.
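Before creating the sink, it can help to confirm that the IAM user access key can actually write to the bucket in the given region. The sketch below is a hypothetical pre-flight check, not a Spotflow tool. It assumes the sink needs `s3:PutObject` on the bucket (under the static prefix, if you use one); the exact permissions Spotflow requires are described on the Amazon S3 Egress Sink page. The helper names (`probe_key`, `check_write_access`), the bucket name, the region, and the key values are placeholders. It uses the real `boto3` S3 client and its `put_object` call.

```python
import uuid


def probe_key(prefix: str = "") -> str:
    """Build a unique object key, placed under the optional static prefix."""
    name = f"spotflow-write-probe-{uuid.uuid4().hex}"
    if not prefix:
        return name
    return prefix.rstrip("/") + "/" + name


def check_write_access(s3_client, bucket: str, prefix: str = "") -> str:
    """Upload an empty object under the prefix and return its key.

    Assumption: write access (s3:PutObject) is what the sink needs.
    boto3 raises botocore.exceptions.ClientError (e.g. AccessDenied) on failure.
    The probe object is left in place because the IAM user may not be
    allowed to delete objects; remove it manually afterwards.
    """
    key = probe_key(prefix)
    s3_client.put_object(Bucket=bucket, Key=key, Body=b"")
    return key


if __name__ == "__main__":
    import boto3  # pip install boto3

    # Placeholder values: use the same bucket name, region, and IAM user
    # access key that you plan to enter when creating the egress sink.
    client = boto3.client(
        "s3",
        region_name="eu-west-1",
        aws_access_key_id="YOUR_ACCESS_KEY_ID",
        aws_secret_access_key="YOUR_SECRET_ACCESS_KEY",
    )
    written = check_write_access(client, "my-target-bucket", prefix="devices/")
    print(f"Write OK: s3://my-target-bucket/{written}")
```

The S3 client is passed in rather than created inside `check_write_access`, so the check can also run against a stub client without network access.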

The new egress sink can be configured via the Portal, the CLI, or the API.

Previously, this use case was possible only by writing a custom processor that consumed data through one of the existing egress sinks, such as Azure Event Hub.

This approach remains viable for both existing and new customers, but we recommend migrating to the new AWS S3 egress sink.

Creating an Egress Sink

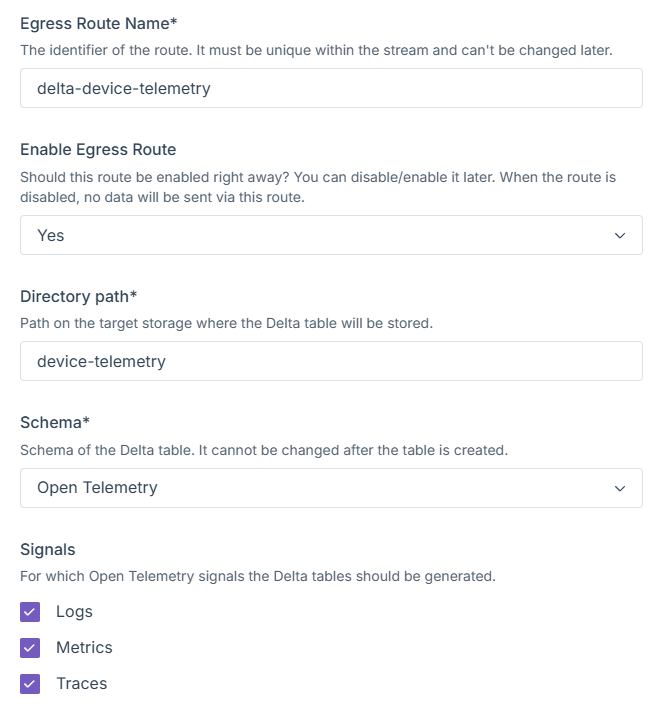

Creating an Egress Route